Mapping the Hidden Networks of AI Harm

Preliminary web-based network graph (work in progress): https://ozgur.onal.info/AI-incidents/

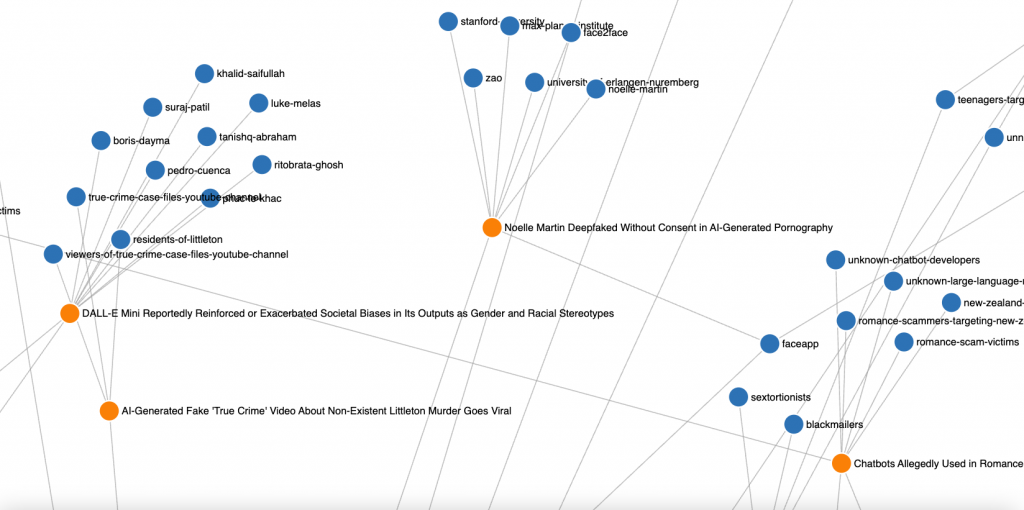

This ongoing study investigates the systemic structure of AI incidents by mapping more than 1,000 real-world cases and 3,200 related entities into an interactive visual network. The objective is to identify hidden correlations, relational blind spots, and the pathways along which harm propagates within AI ecosystems.

Research Context

AI incidents—events where AI systems cause harm, behave unpredictably, or produce unintended outcomes—span categories such as performance failure, bias, discrimination, security breaches, and opacity in autonomous systems. While the frequency of such events is increasing, the larger patterns behind them remain underexplored. Current reporting mechanisms are fragmented, limiting the ability to observe systemic relationships.

Methodological Approach

A generative visualization model is under active development. Each incident is encoded as a dynamic particle, positioned and parameterized by its attributes: date, severity, involved entities, and domain. The simulation environment uses particle systems and algorithmic drawing to surface relationships that traditional visualization techniques obscure.
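To make the encoding step concrete, here is a minimal sketch of how one incident's attributes could be mapped to the initial state of a particle. It is not the project's actual code. The names `Incident`, `Particle`, `encode` and `domain_hue` are hypothetical, and so are the 1 to 5 severity scale, the canvas size and the specific mappings:

- date drives horizontal position;
- severity drives vertical position;
- the number of involved entities drives radius;
- the domain drives hue.

```python
import colorsys
import datetime
import hashlib
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Incident:
    title: str
    date: datetime.date
    severity: int  # hypothetical scale: 1 (minor) to 5 (critical)
    domain: str
    entities: List[str]


@dataclass
class Particle:
    x: float
    y: float
    radius: float
    rgb: Tuple[float, float, float]


def domain_hue(domain: str) -> float:
    # Stable hue in [0, 1): the same domain always maps to the same color
    return hashlib.md5(domain.encode("utf-8")).digest()[0] / 256.0


def encode(incident: Incident, start: datetime.date, end: datetime.date,
           width: float = 1000.0, height: float = 600.0) -> Particle:
    """Map an incident's attributes to the initial state of its particle."""
    span = max((end - start).days, 1)
    # Time runs left to right across the canvas
    x = width * (incident.date - start).days / span
    # y = 0 is the top of the canvas, so severe incidents start higher up
    y = height * (1 - (incident.severity - 1) / 4)
    # Incidents involving more entities get larger particles
    radius = 2.0 + len(incident.entities)
    rgb = colorsys.hsv_to_rgb(domain_hue(incident.domain), 0.7, 0.9)
    return Particle(x, y, radius, rgb)
```

In a running simulation these values would only seed each particle. Forces then move particles away from their starting points, while radius and color stay tied to the attributes.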

Work in progress includes:

- Testing graph layouts to balance relational clarity and systemic scale.

- Implementing clustering algorithms to reveal temporal and institutional connections.

- Evaluating color and motion as indicators of severity and propagation speed.

- Integrating external datasets to trace cross-sector linkages (e.g., company networks, regulatory actions).
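The simplest structural grouping links any two incidents that share an entity and takes the connected components. It is a rough baseline for the clustering item above, not the algorithm the project uses. The sketch below implements it with a union-find structure. The input format (a dict from incident id to a set of entity names) and the function name `cluster_by_shared_entities` are assumptions.

```python
from collections import defaultdict


def cluster_by_shared_entities(incidents):
    """incidents: dict mapping incident id -> set of entity names.
    Returns a list of clusters (sets of incident ids); two incidents end up in the
    same cluster if a chain of shared entities connects them."""
    parent = {i: i for i in incidents}

    def find(i):
        # Path halving keeps the union-find trees shallow
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    # Index incidents by entity, then merge all incidents that mention the same entity
    by_entity = defaultdict(list)
    for inc_id, entities in incidents.items():
        for entity in entities:
            by_entity[entity].append(inc_id)
    for members in by_entity.values():
        for other in members[1:]:
            union(members[0], other)

    clusters = defaultdict(set)
    for inc_id in incidents:
        clusters[find(inc_id)].add(inc_id)
    return list(clusters.values())
```

One caveat applies to this dataset. A few entities appear in dozens of incidents, and connected components will then tend to collapse into one giant cluster. Modularity-based community detection (for example, Louvain) is the more usual choice at this scale.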

Preliminary Observations

Early prototypes indicate recurrent interaction patterns between data suppliers, platform providers, and model deployers, suggesting harm propagation across shared infrastructure layers. The model also highlights temporal synchronization between certain incident types, implying possible structural coupling rather than coincidence.
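One simple way to quantify the temporal synchronization described above is a two-step check:

1. Bin incidents of each type by month.
2. Compute the Pearson correlation between the resulting count series.

The sketch assumes events arrive as `(incident_type, "YYYY-MM")` pairs. The helper names are hypothetical.

```python
from collections import Counter
from math import sqrt


def monthly_series(events, incident_type, months):
    """events: iterable of (incident_type, 'YYYY-MM') pairs.
    months: ordered list of 'YYYY-MM' keys defining the time axis.
    Returns the monthly incident counts for one type, aligned to `months`."""
    counts = Counter(m for t, m in events if t == incident_type)
    return [counts[m] for m in months]


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a flat series carries no co-movement signal
    return cov / (sx * sy)
```

A high coefficient is consistent with structural coupling. A shared reporting spike, such as a wave of media attention, would produce the same signal. This measure can therefore flag candidate pairs of incident types, but it cannot confirm coupling on its own.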

Current Development Focus

- Refining the network’s force-directed simulation to accommodate new metadata dimensions (e.g., legal outcomes, ethical categories).

- Extending the taxonomy of incident types to include emergent categories such as synthetic media misuse and autonomous coordination failures.

- Developing a comparative layer for analyzing intervention effectiveness over time.
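The text does not say which engine drives the force-directed simulation. As a reference point, here is a minimal Fruchterman-Reingold layout in plain Python, not the project's implementation. It works as follows:

- every pair of nodes repels with force k²/d;
- every edge attracts with force d²/k;
- a cooling temperature caps each step.

The function name `force_layout` and its parameters are hypothetical.

```python
import math
import random


def force_layout(nodes, edges, iterations=200, width=1.0, height=1.0, seed=0):
    """Minimal Fruchterman-Reingold layout.
    nodes: list of node ids; edges: list of (a, b) pairs.
    Returns {node: (x, y)} with every position inside the width x height frame."""
    rng = random.Random(seed)
    pos = {n: [rng.uniform(0, width), rng.uniform(0, height)] for n in nodes}
    k = math.sqrt(width * height / max(len(nodes), 1))  # ideal edge length
    t0 = width / 10  # initial maximum displacement per iteration

    for it in range(iterations):
        temp = t0 * (1 - it / iterations)  # linear cooling
        disp = {n: [0.0, 0.0] for n in nodes}

        # Repulsion between every pair of nodes: k^2 / d
        for i, a in enumerate(nodes):
            for b in nodes[i + 1:]:
                dx, dy = pos[a][0] - pos[b][0], pos[a][1] - pos[b][1]
                d = math.hypot(dx, dy) or 1e-9
                f = k * k / d
                disp[a][0] += dx / d * f
                disp[a][1] += dy / d * f
                disp[b][0] -= dx / d * f
                disp[b][1] -= dy / d * f

        # Attraction along edges: d^2 / k
        for a, b in edges:
            dx, dy = pos[a][0] - pos[b][0], pos[a][1] - pos[b][1]
            d = math.hypot(dx, dy) or 1e-9
            f = d * d / k
            disp[a][0] -= dx / d * f
            disp[a][1] -= dy / d * f
            disp[b][0] += dx / d * f
            disp[b][1] += dy / d * f

        # Move each node by at most `temp` and clamp it to the frame
        for n in nodes:
            dx, dy = disp[n]
            length = math.hypot(dx, dy) or 1e-9
            step = min(length, temp)
            pos[n][0] = min(width, max(0.0, pos[n][0] + dx / length * step))
            pos[n][1] = min(height, max(0.0, pos[n][1] + dy / length * step))

    return {n: (p[0], p[1]) for n, p in pos.items()}
```

A new metadata dimension such as legal outcome could plausibly enter as a per-edge weight multiplying the attraction term. That is an assumption about the design, not a description of the current model. Pairwise repulsion is O(n²) per iteration, so a network of several thousand nodes would need a Barnes-Hut approximation or an existing engine.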

Next Steps

- Conduct parameter sensitivity tests to assess model stability and interpretability.

- Validate visual clusters through manual case analysis and cross-referencing with academic incident repositories.

- Prepare a mid-phase report outlining detected systemic vulnerabilities and recommendations for improved incident documentation standards.
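Validating visual clusters against manual case analysis needs an agreement measure between two labelings of the same incidents. A minimal sketch using the Rand index follows. The Rand index is the fraction of incident pairs that both labelings either group together or keep apart. The dict-based input and the function name `rand_index` are assumptions.

```python
from itertools import combinations


def rand_index(predicted, manual):
    """predicted, manual: dicts mapping incident id -> cluster label.
    Only incidents present in both labelings are compared.
    Returns a value in [0, 1]; 1.0 means the two partitions are identical."""
    ids = sorted(set(predicted) & set(manual))
    pairs = list(combinations(ids, 2))
    if not pairs:
        return 1.0
    agree = sum(
        (predicted[a] == predicted[b]) == (manual[a] == manual[b])
        for a, b in pairs
    )
    return agree / len(pairs)
```

Label names do not matter, only which incidents share a label. The plain Rand index is inflated by chance agreement when there are many small clusters. The adjusted Rand index (available as `adjusted_rand_score` in scikit-learn's `sklearn.metrics`) corrects for this.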

Objective

The ultimate goal is to develop an open, adaptive research instrument capable of revealing the sociotechnical dynamics of AI failure—informing risk assessment, accountability mapping, and the design of preventive governance mechanisms.

The AI Incidents Dataset Used in This Study

Over the last couple of years, I have been working on the ethical dimensions of AI and curating a relational network dataset of AI incidents from open-source resources. The dataset documents real-world harms (or near harms) caused by the deployment of AI systems. The unprocessed raw data comes from incidentdatabase.ai. That database serves as a critical historical record for learning from past experiences and mitigating future risks.

In addition, MIT has released a new dataset at https://airisk.mit.edu/. I plan to merge the two into a larger set to support a more accurate analysis of the relationships between incidents.
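The first practical problem in such a merge is deduplication, since the same incident may appear in both sources. A minimal sketch follows. It treats two records as the same incident when their normalized titles and dates match. The record layout is hypothetical and is not the actual schema of either dataset: lists of dicts with `title`, `date` (`YYYY-MM-DD`) and `source` keys.

```python
import re
import unicodedata


def normalize_title(title):
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def merge_datasets(primary, secondary):
    """primary, secondary: lists of dicts with hypothetical 'title', 'date', 'source' keys.
    Duplicates keep the first record seen (primary wins) and note the other sources."""
    merged = {}
    for record in primary + secondary:
        key = (normalize_title(record["title"]), record["date"])
        if key in merged:
            merged[key]["also_in"].append(record["source"])
        else:
            merged[key] = {**record, "also_in": []}
    return list(merged.values())
```

Exact key matching misses incidents that the two sources describe with different titles. A fuzzy title comparison within a date window, followed by manual review, would likely be needed for the full merge.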

Dataset Fields:

The structured dataset includes:

Incident title and date (unique identifiers and timeline of AI-related incidents; more than 1,000 incidents)

Description (summarized narrative of the incident)

Entities (more than 3,000 entities that deploy, develop, or are harmed by AI systems):

- Alleged deployer and developer

- Harmed or nearly harmed parties (affected companies, individuals, or groups)
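The fields above map naturally onto a bipartite network, with entities on one side, incidents on the other, and edges labeled by role. A minimal sketch of such a record and its edge extraction follows. The class name `IncidentRecord`, its attribute names and the role labels are assumptions, not the dataset's actual column names.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class IncidentRecord:
    incident_id: int
    title: str
    date: str  # ISO 8601, e.g. "2023-05-01"
    description: str
    deployers: List[str] = field(default_factory=list)   # alleged deployers
    developers: List[str] = field(default_factory=list)  # alleged developers
    harmed: List[str] = field(default_factory=list)      # harmed or nearly harmed parties


def entity_edges(record: IncidentRecord) -> Iterator[Tuple[str, int, str]]:
    """Yield (entity, incident_id, role) triples: the edges of the entity-incident network."""
    for role, names in (("deployer", record.deployers),
                        ("developer", record.developers),
                        ("harmed", record.harmed)):
        for name in names:
            yield (name, record.incident_id, role)
```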

Example Entities:

| Entity | As deployer and developer | As deployer | As developer | As harmed party |
|---|---|---|---|---|
|  | 52 Incidents | 2 Incidents | 1 Incident | 1 Incident |
|  | 46 Incidents | 0 Incidents | 12 Incidents | 4 Incidents |
| Tesla | 43 Incidents | 0 Incidents | 5 Incidents | 3 Incidents |
| OpenAI | 41 Incidents | 0 Incidents | 52 Incidents | 10 Incidents |
| Meta | 27 Incidents | 2 Incidents | 4 Incidents | 2 Incidents |
| Amazon | 23 Incidents | 2 Incidents | 3 Incidents | 3 Incidents |
| Microsoft | 21 Incidents | 1 Incident | 8 Incidents | 10 Incidents |
| Apple | 14 Incidents | 0 Incidents | 2 Incidents | 3 Incidents |
| YouTube | 14 Incidents | 0 Incidents | 0 Incidents | 0 Incidents |
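Counts like those in the table can be derived from role-labeled entity-incident edges. The sketch below rests on my own reading of the columns: each incident is counted once per entity, under "deployer and developer" if the entity played both roles, and otherwise under whichever single role it played. Harm is counted separately. The edge format and the column keys are hypothetical.

```python
from collections import Counter, defaultdict


def role_table(edges):
    """edges: iterable of (entity, incident_id, role), role in {'deployer', 'developer', 'harmed'}.
    Returns {entity: Counter} with one count per incident for each table column."""
    roles = defaultdict(set)  # (entity, incident) -> roles the entity played in it
    for entity, incident_id, role in edges:
        roles[(entity, incident_id)].add(role)

    table = defaultdict(Counter)
    for (entity, _incident), played in roles.items():
        if {"deployer", "developer"} <= played:
            table[entity]["deployer_and_developer"] += 1
        elif "deployer" in played:
            table[entity]["deployer_only"] += 1
        elif "developer" in played:
            table[entity]["developer_only"] += 1
        if "harmed" in played:
            table[entity]["harmed"] += 1
    return table
```

Sorting entities by `table[entity]["deployer_and_developer"]` in descending order would reproduce the ordering used in the table above.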


Sample incident:

Title: Facebook translates ‘good morning’ into ‘attack them’, leading to arrest

Description: Facebook’s automatic translation software incorrectly translated an Arabic post reading “Good morning” into Hebrew as “hurt them,” leading to the arrest of a Palestinian man in Beitar Illit, Israel.

Harmed entities: Palestinian Facebook users, Arabic-speaking Facebook users