Model scope and objectives

The overall goal of the intent classification task is to develop a machine learning model that accurately identifies the intent behind natural language statements in the enteral tube feeding domain. The objective is to make customer service interactions more efficient and effective by automatically routing each input sentence to the dialogue initiator of the corresponding flow. To achieve this, the model must be trained on a large dataset of labeled examples that covers the various intents in the domain. The model should also handle the variations in language, syntax, and context that are common in natural language queries, as well as spelling errors and typos. Ultimately, the success of the model is measured by its classification accuracy and F-score, both calculated with a batch testing procedure.
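The document does not say which F-score averaging is used. As a minimal sketch, assuming macro averaging (each case label weighted equally) and plain Python lists of gold and predicted labels, the two batch-test metrics could be computed as follows. The function names and the label names in the usage note are hypothetical.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Share of batch-test sentences whose first prediction matches the gold label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-label F1 scores (macro averaging, an assumption)."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # the predicted label received a false positive
            fn[t] += 1  # the gold label missed this sentence
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

For example, with gold labels `["tube_blocked", "tube_blocked", "pump_alarm", "pump_alarm"]` and predictions `["tube_blocked", "pump_alarm", "pump_alarm", "pump_alarm"]`, `accuracy` returns 0.75 and `macro_f1` returns about 0.733. Macro averaging keeps rare labels visible in the score; weighting by label frequency is a common alternative.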

Technically, the problem can be restated as a “single-label text classification task using a transformer model for the purpose of intent classification”.

Our classifier is agnostic to nutrition type and method: the model does not take these two inputs into account. Its attention is on the essential intention of the user, independent of nutrition type and method. After the classification step, however, we use the nutrition type and method information to map the intention to more specific user flows.
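The two-step design (classify the intent first, then specialise it with nutrition type and method) can be pictured as a lookup table. In this sketch every label, nutrition type, method, flow name, and the generic fallback rule is hypothetical; the document does not describe the actual mapping.

```python
# Hypothetical intent labels, nutrition types and methods, for illustration only.
FLOW_MAP = {
    ("tube_blocked", "formula", "pump"): "flow_tube_blocked_formula_pump",
    ("tube_blocked", "formula", "syringe"): "flow_tube_blocked_formula_syringe",
    ("tube_blocked", "blended", "syringe"): "flow_tube_blocked_blended_syringe",
}

# Assumed fallback: a generic flow per intent when no specific variant exists.
GENERIC_FLOWS = {"tube_blocked": "flow_tube_blocked_generic"}


def route(intent, nutrition_type, method):
    """Map a type/method-agnostic intent to the most specific user flow available."""
    specific = FLOW_MAP.get((intent, nutrition_type, method))
    if specific is not None:
        return specific
    return GENERIC_FLOWS.get(intent, "flow_fallback")
```

Keeping the classifier agnostic means the label set does not multiply by every nutrition type and method combination; the combinatorics live in the table instead.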

Methods of data collection

Per case label:

- Initial sentences (base sentences)

~30-35 sentences based on the chatbot history of the Turkish version and the existing flows/topics.

- Sentences from Google suggestions for similar questions and from field research

~30-35 sentences.

- Synthetic data generated by ChatGPT

20-25 ChatGPT variations per base sentence above.

Model Training

All sentences are preprocessed first: they are converted to lower case and cleaned of unnecessary symbols and punctuation. The cleaned sentences are then used to train the model. Lowercasing is compatible with the model because we use the uncased BERT model. Before prediction, the input sentence is lowercased in the same way, so the training and prediction pipelines are aligned.
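The document does not list the exact symbols that are removed. A minimal sketch, assuming that everything other than letters, digits and whitespace counts as an unnecessary symbol, is a single `preprocess` function (a hypothetical name). One way to keep training and prediction aligned is to have both the training script and the prediction service call this same function.

```python
import re

# Assumption: "unnecessary symbols and punctuation" means any character that is
# not a letter, digit or whitespace. Underscore is matched separately because
# \w would otherwise keep it. The real cleaning rules may differ.
_NON_WORD = re.compile(r"[^\w\s]|_")
_SPACES = re.compile(r"\s+")


def preprocess(sentence):
    """Lowercase, replace symbols/punctuation with spaces, collapse whitespace."""
    text = sentence.lower()
    text = _NON_WORD.sub(" ", text)
    return _SPACES.sub(" ", text).strip()
```

For example, `preprocess("My tube is BLOCKED!!! What now?")` returns `"my tube is blocked what now"`. Python's `\w` is Unicode-aware for `str` patterns, so accented letters are kept rather than stripped.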

Dataset Split

| Set | Sentences | Share of total | Combined share |
|---|---|---|---|
| Train (train) | 19,657 | 85% | 95% (train + validation) |
| Train (validation) | 2,185 | 10% | |
| Test (for batch test) | 1,149 | 5% | 5% |
| Grand total | 22,991 | 100% | 100% |
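The counts are consistent with carving the validation set out of my-train.tsv: 2,185 is about 10% of the 21,842 sentences in the 95% training file. A minimal sketch of such a two-stage random split follows, assuming a fixed shuffle seed and integer rounding (so counts may differ by one from the table). `split_dataset` and its parameters are hypothetical names.

```python
import random


def split_dataset(rows, test_pct=5, val_pct=10, seed=42):
    """Two-stage split: hold out test_pct% for the batch test, then take
    val_pct% of the remaining training file as the validation set."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)  # reproducible shuffle
    n_test = len(rows) * test_pct // 100  # integer arithmetic, rounds down
    test, train_file = rows[:n_test], rows[n_test:]
    n_val = len(train_file) * val_pct // 100
    val, train = train_file[:n_val], train_file[n_val:]
    return train, val, test
```

A plain random split can leave small case labels under-represented in the test set; a stratified split per label (for example scikit-learn's `train_test_split` with its `stratify` parameter) keeps each label's share equal across the sets.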

Datasets and their functions

| Name of file | Purpose |
|---|---|
| master.tsv | Master dataset file |
| my-test.tsv (5%) | Auto-generated split for the batch test |
| my-train.tsv (95%) | Auto-generated split to train the base model |
| encoding.tsv | Auto-generated encoding file for the case labels; holds pairs of label texts and their index numbers |
| vocab_word-frequency-remove.txt | Dictionary file to fine-tune the spell-checking algorithm |
| vocab-essential.txt | Dictionary file used during outlier detection; holds the essential, "DNA-like" words that define the relevance of a sentence |
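The table does not specify the column order of encoding.tsv or how vocab-essential.txt is applied. The sketch below assumes a `label<TAB>index` layout with indices assigned in alphabetical label order, one word per line in the vocabulary file, and a simple rule that a cleaned sentence counts as relevant (not an outlier) if it contains at least one essential word. All function names are hypothetical.

```python
import csv
import io


def build_label_encoding(labels):
    """Assign a stable integer index to each distinct case label (alphabetical order)."""
    return {label: idx for idx, label in enumerate(sorted(set(labels)))}


def write_encoding(encoding, stream):
    """Write label/index pairs as tab-separated rows (assumed encoding.tsv layout)."""
    writer = csv.writer(stream, delimiter="\t", lineterminator="\n")
    for label, idx in sorted(encoding.items(), key=lambda item: item[1]):
        writer.writerow([label, idx])


def load_vocab(lines):
    """Read one word per line, ignoring blank lines (assumed file format)."""
    return {line.strip().lower() for line in lines if line.strip()}


def is_relevant(clean_sentence, essential_words):
    """Assumed outlier rule: in-domain only if at least one essential word occurs."""
    return any(token in essential_words for token in clean_sentence.split())


if __name__ == "__main__":
    buffer = io.StringIO()
    write_encoding(build_label_encoding(["tube_blocked", "pump_alarm"]), buffer)
    print(buffer.getvalue(), end="")  # pump_alarm\t0, then tube_blocked\t1
```

The membership test runs on the already lowercased and cleaned sentence, so the vocabulary words are lowercased on load to match.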

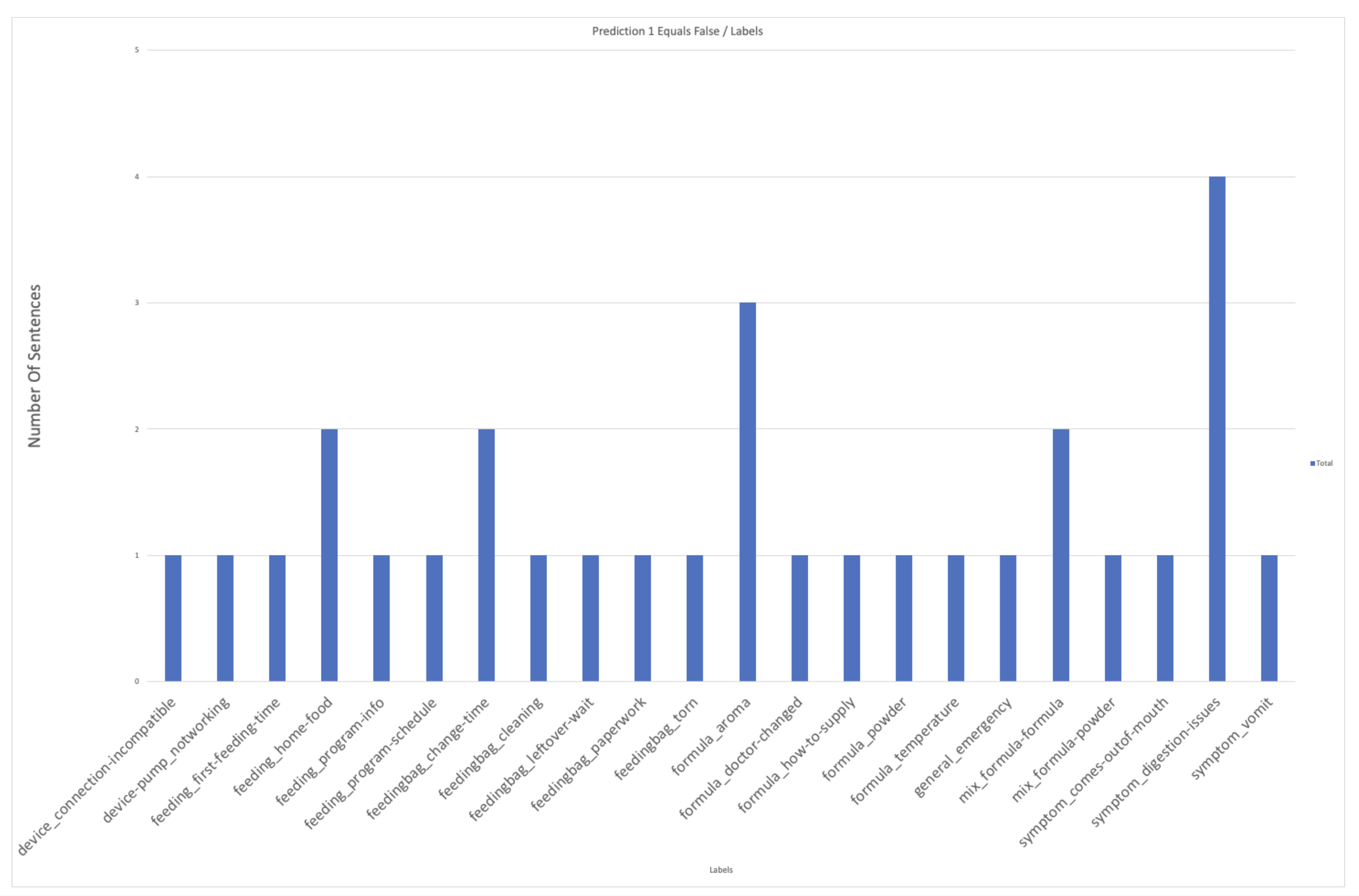

Which labels are in trouble?

The chart shows the number of wrong predictions per case label.
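Assuming the chart groups errors by the gold (true) case label, the counts behind it can be reproduced from batch-test results with a `Counter`. Counting (gold, predicted) pairs as well shows which label each troubled label is confused with. The function names are hypothetical.

```python
from collections import Counter


def wrong_per_label(y_true, y_pred):
    """Number of misclassified batch-test sentences per gold case label."""
    return Counter(t for t, p in zip(y_true, y_pred) if t != p)


def confusion_pairs(y_true, y_pred):
    """Number of misclassifications per (gold label, predicted label) pair."""
    return Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
```

`wrong_per_label(gold, pred).most_common(5)` lists the five labels with the most errors, and the top entries of `confusion_pairs` point at label pairs whose training sentences may overlap.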

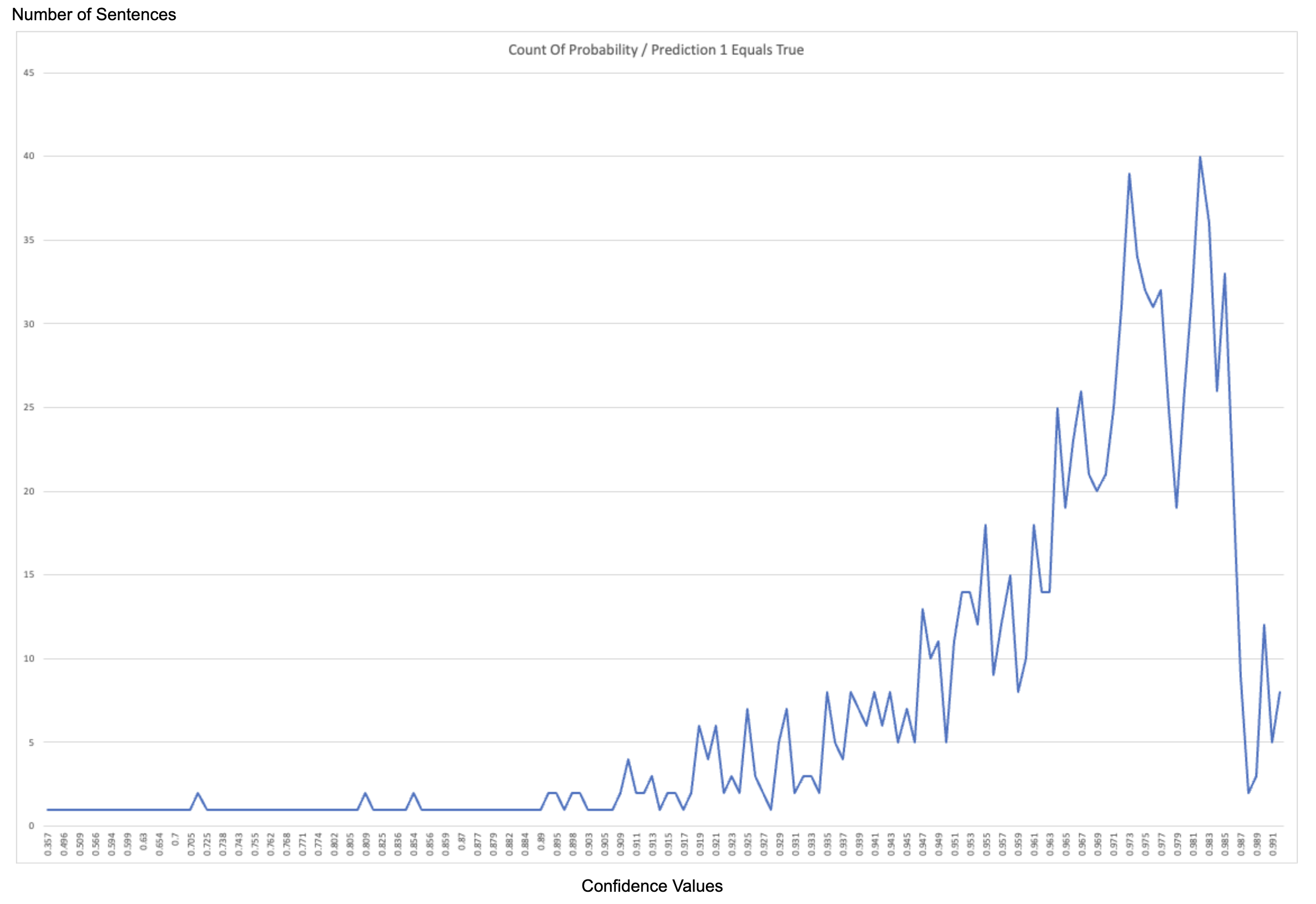

How confident are “correct” predictions?

The chart shows the number of sentences (y-axis) per confidence value (x-axis) for our correct first (top-ranked) predictions.

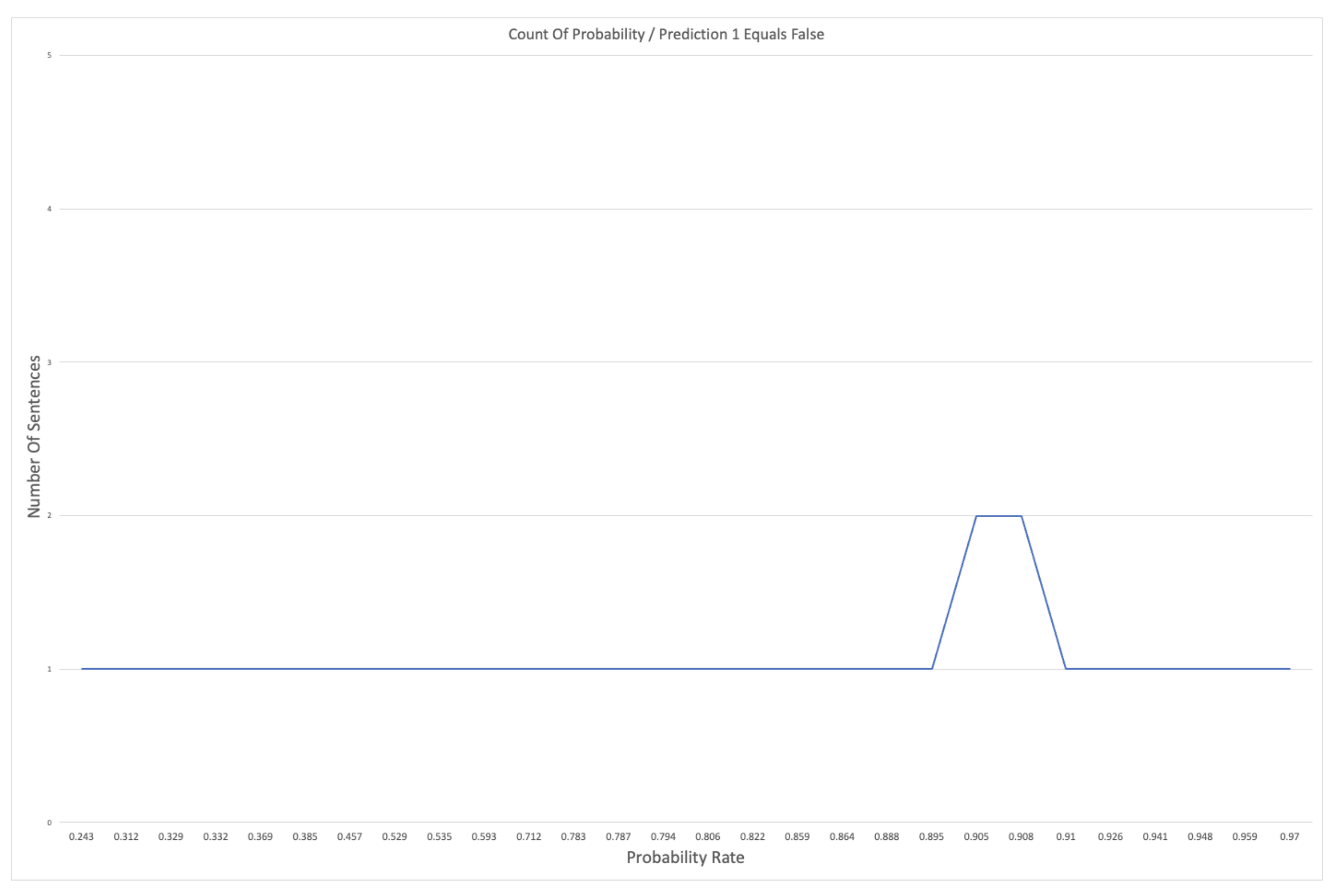

How confident are “wrong” predictions?

The chart shows the number of sentences (y-axis) per confidence value (x-axis) for our wrong first (top-ranked) predictions.
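The document does not define how confidence is computed. A common choice, assumed here, is the softmax probability of the top-ranked class. The sketch below computes that value from raw logits and bins it separately for correct and wrong first predictions, which is the data behind the two confidence charts. The ten equal-width bins and all names are assumptions.

```python
import math
from collections import Counter


def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top1(logits):
    """Return (index, confidence) of the first (highest-probability) prediction."""
    probs = softmax(logits)
    idx = max(range(len(probs)), key=probs.__getitem__)
    return idx, probs[idx]


def confidence_histograms(results, n_bins=10):
    """results: iterable of (gold_index, logits) pairs from the batch test.
    Returns two Counters mapping bin number -> sentence count."""
    correct, wrong = Counter(), Counter()
    for gold, logits in results:
        idx, conf = top1(logits)
        b = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes into the last bin
        target = correct if idx == gold else wrong
        target[b] += 1
    return correct, wrong
```

Comparing the two histograms suggests a confidence threshold: if wrong predictions cluster in low bins while correct ones sit near 1.0, sentences below the threshold can be sent to a clarification step instead of a flow.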